Data Laced with History: Causal Trees & Operational CRDTs

Mar 24, 2018 programming

(Sorry about the length! At some point in the distant past, this was supposed to be a short blog post. If you like, you can skip straight to the demo section to get a sense of what this article is about.)

Embarrassingly, most of my app development to date has been confined to local devices. Programmers like to gloat about the stupendous mental castles they build of their circuitous, multi-level architectures, but not me. In truth, networks leave me quite perplexed. I start thinking about data serializing to bits, servers performing their arcane handshakes and voting rituals, merge conflicts pushing into app-space and starting the whole process over again—and it all just turns to mush in my head. For peace of mind, my code needs to be locally provable, and this means things like idempotent functions, decoupled modules, contiguous data structures, immutable objects. Networks, unfortunately, throw a giant wrench in the works.

Sometime last year, after realizing that most of my document-based apps would probably need to support sync and collaboration in the future, I decided to finally take a stab at the problem. Granted, there were tons of frameworks that promised to do the hard work of data model replication for me, but I didn’t want to black-box the most important part of my code. My gut told me that there had to be some arcane bit of foundational knowledge that would allow me to sync my documents in a refined and functional way, decoupled from the stateful spaghetti of the underlying network layer. Instead of downloading a Github framework and smacking the build button, I wanted to develop a base set of skills that would allow me to easily network any document-based app in the future, even if I was starting from scratch.

The first order of business was to devise a wishlist for my fantastical system:

- Most obviously, users should be able to edit their documents immediately, without even touching the network. (In other words, the system should only require optimistic concurrency.)

- Sync should happen in the background, entirely separate from the main application code, and any remote changes should be seamlessly integrated in real-time. (The user shouldn’t have to notice that the network is down.)

- Merge should always be automatic, even for concurrent edits. The user should never be faced with a “pick the correct revision” dialog box.

- A user should be able to work on their document offline for an indefinite period of time without accruing “sync debt”. (Meaning that if, for example, sync is accomplished by sending out mutation events, performance should not suffer even if the user spends a month offline and then sends all their hundreds of changes at once.)

- Secondary data structures and state should be minimized. Most of the extra information required for sync should be stored in the same place as the document, and moving the document to a new device should not break sync. (No out-of-band metadata or caches!)

- Network back-and-forth should be condensed to a bare minimum, and rollbacks and re-syncs should practically never happen. To the greatest possible degree, network communication should be stateless and dumb.

- To top it all off, my chosen technique had to pass the “PhD Test”. That is to say, one shouldn’t need a PhD to understand and implement the chosen approach for custom data models!

After mulling over my bullet points, it occurred to me that the network problems I was dealing with—background cloud sync, editing across multiple devices, real-time collaboration, offline support, and reconciliation of distant or conflicting revisions—were all pointing to the same question: was it possible to design a system where any two revisions of the same document could be merged deterministically and sensibly without requiring user intervention? Sync, per se, wasn’t the issue, since getting data from one device to another was essentially a solved problem. It’s what happened after sync that was troubling. On encountering a merge conflict, you’d be thrown into a busy conversation between the network, model, persistence, and UI layers just to get back into a consistent state. The data couldn’t be left alone to live its peaceful, functional life: every concurrent edit immediately became a cross-architectural matter. On the other hand, if two documents could always be made to merge, then most of that coordination hullabaloo could go out the window. Each part of the system could be made to work at its own pace.

Whether stored as a record in a database or as a stand-alone file, a document could be interpreted as a collection of basic data fields: registers, sequences, dictionaries, and so forth. Looking at the problem from a database perspective, it was actually quite simple to automatically resolve merge conflicts in this kind of table row: just keep overwriting each field with the version sporting the highest timestamp, logical or otherwise. (Ignoring issues of inter-field consistency for now.) Of course, for anything other than basic registers, this was a terrible approach. Sequences and dictionaries weren’t just blobs of homogeneous data that were overwritten with every change, but complex, mutable structures that users were editing on a granular level. For such a fundamental problem, there was a surprising dearth of solutions out in the real world: most systems punted the task to app-space by asking the client to manually fix any merge conflicts or pick the correct version of a file. It seemed that if the problem of automatic merge for non-trivial data types could be solved—perhaps by exposing their local, type-specific mutation vocabulary to the storage and replication layers?—then a solution to the higher-level problem of automatic document merge would fall within reach.

In hope of uncovering some prior art, I started by looking at the proven leader in the field, Google Docs. Venturing down the deep rabbit hole of real-time collaborative editing techniques, I discovered that many of the problems I faced fell under the umbrella of strong eventual consistency. Unlike the more conventional strong consistency model, where all clients receive changes in identical order and rely on locking to some degree, strong eventual consistency allows clients to individually diverge and then arrive at a final, consistent result once each update has been received. (Or, in a word, when the network is quiescent.)

There were a number of tantalizing techniques to investigate, and I kept several questions in mind while doing my analysis. Could a given technique be generalized to arbitrary and novel data types? Did the technique pass the PhD Test? And was it possible to use the technique in an architecture with smart clients and dumb servers?

The reason for that last question was CloudKit Sharing, a framework introduced in iOS 10. For the most part, this framework functioned as a superset of regular CloudKit, requiring only minor code changes to enable document sharing in an app. A developer didn’t even have to worry about connecting users or dealing with UI: Apple did most of the hard work in the background while leveraging standard system dialogs. But almost two years later, on the order of no one seemed to be using it. Why was this? Most other Apple APIs tended to be readily adopted, especially when they allowed the developer to expand into system areas which were normally out of bounds.

My hunch was that CloudKit Sharing forced the issue of real-time collaboration over a relatively dumb channel, which was a task outside the purview of conventional sync approaches. CloudKit allowed developers to easily store, retrieve, and listen for new data, but not much else besides. No third-party code was allowed to run on Apple’s servers, so merge conflicts had to be handled locally. But unlike in the single-user case, which presented limited opportunities for concurrent edits, you couldn’t just pop up a merge dialog every time another participant in your share made a change to your open document. The only remaining options seemed to be some sort of ugly, heuristic auto-merge or data-dropping last-write-wins, neither of which was acceptable for real-time use. Collaboration along the lines of Google Docs appeared to be impossible using this system! But was it really?

I realized that this was my prize to be won. If I could figure out a way to develop auto-merging documents, I’d be able to implement sync and collaboration in my apps over CloudKit while using Apple’s first-party sharing UI—all without having to pay for or manage my own servers. So this became my ultimate research goal: a collaborative iPhone text editing demo that synced entirely over CloudKit. (And here’s a spoiler: it worked!)

Convergence Techniques: A High-Level Overview

There are a few basic terms critical to understanding eventual consistency. A network is comprised of sites (“devices”, “peers”) operating in parallel, each one producing operations (“events”, “actions”) that mutate the data and exchange information with other sites. The first vital concept here is causality. An operation is caused by another operation when it directly modifies or otherwise involves the results of that operation, and determining causality is critical to reconstructing a sensible timeline (or linearization) of operations across the network. (An operation that causes another operation must always be ordered first.) However, we can’t always determine direct causality in a general way, so algorithms often assume that an operation is causally ahead of another one if the site generating the newer operation has already seen the older one at the time of its creation. (In other words, every operation already seen by a site at the time a new operation is created is in that operation’s causal past.) This “soft” causality can be determined using a variety of schemes. The simplest is a Lamport timestamp, which requires that every new operation have a higher timestamp than every other known operation, including any remote operations previously received. Although there are eventual consistency schemes that can receive operations in any order, most algorithms rely on operations arriving at each site in their causal order (e.g. insert A necessarily arriving before delete A). When discussing convergence schemes, we can often assume causal order since it can be implemented fairly mechanically on the transport layer. If two operations don’t have a causal relationship—if they were created simultaneously on different sites without knowledge of each other—they are said to be concurrent. Most of the hard work in eventual consistency involves dealing with these concurrent operations. Generally speaking, concurrent operations have to be made to commute, or have the same effect on the data regardless of their order of arrival. This can be done in a variety of ways1.

Given their crucial role in event ordering, different schemes for keeping time are worth expanding on a bit. First, there’s standard wall clock time. Although this is the only timestamp that can record the exact moment at which an event occurred, it also requires coordination between sites to correct clock drift and deal with devices whose clocks are significantly off. (Otherwise, causality violations may occur.) The server in a typical client-server architecture can serve as the authoritative clock source, but this sort of easy solution isn’t available in a true distributed system. Instead, the common approach is to use a logical timestamp, which takes logical timestamps from previous events as input and doesn’t require any coordination or synchronization to work. Lamport timestamps, mentioned above, are the simplest kind of logical timestamp, but only provide a rough sense of event causality. In order to know for certain whether two events are concurrent or sequential, or to tell which events might be missing from causal order, a more advanced timestamp such as a version vector needs to be used. Version vectors (or their close relatives, vector clocks) give us very precise information about the order and causality of events in a distributed system, but come with potentially untenable space complexity, since each timestamp has to include a value for every previously-seen site in the network. You can read in detail about the different kinds of logical clocks in this handy ACM Queue article.

Finally, there’s the notion of an event log. Usually, operations in a distributed system are executed and discarded on receipt. However, we can also store the operations as data and play them back when needed to reconstruct the model object. (This pattern goes by the name of event sourcing in enterprise circles.) An event log in causal order can be described as having a partial order, since concurrent operations may appear in different positions on different sites depending on their order of arrival. If the log is guaranteed to be identical on all devices, it has a total order. Usually, this can be achieved by sorting operations first by their timestamp, then by some unique origin ID. (Version vectors work best for the timestamp part since they can segregate concurrent events by site instead of simply interleaving them.) Event logs in total order are especially great for convergence: when receiving a concurrent operation from a remote site, you can simply sort it into the correct spot in the event log, then play the whole log back to rebuild the model object with the added influence from the new operation. But it’s not a catch-all solution, since you can run into a O(n2) complexity wall depending on how your events are defined.

Now, there are two competing approaches in strong eventual consistency state-of-the-art, both tagged with rather unappetizing initialisms: Operational Transformation (OT) and Conflict-Free Replicated Data Types (CRDTs). Fundamentally, these approaches tackle the same problem: given an object that has been mutated by an arbitrary number of connected devices, how do we coalesce and apply their changes in a consistent way, even when those changes might be concurrent or arrive out of order? And, moreover, what do we do if a user goes offline for a long time, or if the network is unstable, or even if we’re in a peer-to-peer environment with no single source of truth?

Operational Transformation

Operational Transformation is the proven leader in the field, notably used by Google Docs and (now Apache) Wave as well as Etherpad and ShareJS. Unfortunately, it is only “proven” insofar as you have a company with billions of dollars and hundreds of PhDs at hand, as the problem is hard. With OT, each user has their own copy of the data, and each atomic mutation is called an operation. (For example, insert A at index 2 or delete index 3.) Whenever a user mutates their data, they send their new operation to all their peers, often in practice through a central server. OT generally makes the assumption that the data is a black box and that incoming operations will be applied directly on top without the possibility of a rebase. Consequently, the only way to ensure that concurrent operations commute in their effect is to transform them depending on their order.

Let’s say Peer A inserts a character in a string at position 3, while Peer B simultaneously deletes a character at position 2. If Peer C, who has the original state of the string, receives A’s edit before B’s, everything is peachy keen. However, if B’s edit arrives first, A’s insertion will be in the wrong spot. A’s insertion position will therefore have to be transformed by subtracting the length of B’s edit. This is fine for the simple case of two switched edits, but it gets a whole lot more complicated when you start dealing with more than a single pair of concurrent changes. (An algorithm that deals with this case—and thus, provably, with any conceivable case—is said to be have the “CP2/TP2” property rather than the pairwise “CP1/TP1” property. Yikes, where’s the naming committee when you need it?) In fact, the majority of published algorithms for string OT actually have subtle bugs in certain edge cases (such as the so-called “dOPT puzzle”), meaning that they aren’t strictly convergent without occasional futzing and re-syncing by way of a central server. And while the idea that you can treat your model objects strictly in terms of operations is elegant in its premise, the fact that adding a new operation to the schema requires figuring out its interactions with every existing operation is nearly impossible to grapple with.

Conflict-Free Replicated Data Types

Conflict-Free Replicated Data Types are the new hotness in the field. In contrast to OT, the CRDT approach considers sync in terms of the underlying data structure, not the sequence of operations. A CRDT, at a high level, is a type of object that can be merged with any objects of the same type, in arbitrary order, to produce an identical union object. CRDT merge must be associative, commutative, and idempotent, and the resulting CRDT for each mutation or merge must be “greater” than than all its inputs. (Mathematically, this flow is said to form a monotonic semilattice. For more info and some diagrams, take a look at John Mumm’s excellent primer.) As long as each connected peer eventually receives the updates of every other peer, the results will provably converge—even if one peer happens to be a month behind. This might sound like a tall order, but you’re already aware of several simple CRDTs. For example, no matter how you permute the merge order of any number of insert-only sets, you’ll still end up with the same union set in the end. Really, the concept is quite intuitive!

Of course, simple sets aren’t enough to represent arbitrary data, and much of CRDT research is dedicated to finding new and improved ways of implementing sequence CRDTs, often under the guise of string editing. Algorithms vary, but this is generally accomplished by giving each individual letter its own unique identifier, then giving each letter a reference to its intended neighbor instead of dealing with indices. On deletion, letters are usually replaced with tombstones (placeholders), allowing two sites to concurrently reference and delete a character at the same time without any merge conflicts. This does tend to mean that sequence CRDTs perpetually grow in proportion to the number of deleted items, though there are various ways of dealing with this accumulated garbage.

One last thing to note is that there are actually two kinds of CRDTs: CmRDTs and CvRDTs. (Seriously, there’s got to be a better way to name these things…) CmRDTs, or operation-based CRDTs, only require peers to exchange mutation events2, but place some constraints on the transport layer. (For instance, exactly-once and/or causal delivery, depending on the CmRDT in question.) With CvRDTs, or state-based CRDTs, peers must exchange their full data structures and then merge them locally, placing no constraints on the transport layer but taking up far more bandwidth and possibly CPU time. Both types of CRDT are equivalent and can be converted to either form.

Differential Synchronization

There’s actually one more technique that’s worth discussing, and it’s a bit of an outlier. This is Neil Fraser’s Differential Synchronization. Used in an earlier version of Google Docs before their flavor of OT was implemented, Differential Sync uses contextual diffing between local revisions of documents to generate streams of frequent, tiny edits between peers. If there’s a conflict, the receiving peer uses fuzzy patching to apply the incoming changes as best as possible, then contextually diffs the resulting document with a reproduced copy of the sender’s document (using a cached “shadow copy” of the last seen version) and sends the new changes back. This establishes a sort of incremental sync loop. Eventually, all peers converge on a final, stable document state. Unlike with OT and CRDTs, the end result is not mathematically defined, but instead relies on the organic behavior of the fuzzy patching algorithm when faced with diffs of varying contexts and sizes.

Finding the Best Approach

Going into this problem, my first urge was to adopt Differential Sync. One might complain that this algorithm has too many subjective bits for production use, but that’s exactly what appealed to me about it. Merge is a complicated process that often relies on heuristics entirely separate from the data format. A human would merge two list text files and two prose text files very differently, even though they might both be represented as text. With Differential Sync, all this complex logic is encapsulated in the diff and patch functions. Like git, the system is content-centric in the sense that the patches work directly with the output data and don’t have any hooks into the data structure or code. The implementation of the data format could be refactored as needed, and the diff and patch functions could be tweaked and improved over time, and neither system would have to know about changes to the other. It also meant that the documents in their original form could be preserved in their entirety server-side, synergizing nicely with Dropbox-style cloud backup. It felt like the perfect dividing line of abstraction.

But studying Differential Sync further, I realized that a number of details made it a non-starter. First, though the approach seems simple on the surface, its true complexity is concealed by the implementation of diff and patch. This class of functions works well for strings, but you basically need to be a seasoned algorithms expert to design a set for a new data type. (Worse: the inherent fuzziness depends on non-objective metrics, so you’d only be able to figure out the effectiveness of your algorithms after prolonged use and testing instead of formal analysis.) Second, diff and patch as they currently exist are really meant for loosely-structured data such as strings and images. Barring conversion to text-based intermediary formats, tightly structured objects would be very difficult to diff and patch while maintaining consistency. Next, there are some issues with using Differential Sync in an offline-first environment. Clients have to store their entire diff history while offline, and then, on reconnection, send the whole batch to their peers for a very expensive merge. Assuming that other sites had been editing away in the meantime, distantly-divergent versions would very likely fail to merge on account of out-of-date context info and lose much of the data for the reconnected peer. Finally, Differential Sync only allows one packet at a time to be in flight between two peers. If there are network issues, the whole thing grinds to a halt.

Begrudgingly, I had to abandon the elegance of Differential Sync and decide between the two deterministic approaches. CRDTs raised some troubling concerns, including the impact of per-letter metadata and the necessity of tombstones in most sequence CRDTs. You could end up with a file that looked tiny (or even empty) but was in fact enormous under the hood. However, OT was a no-go right from the start. One, the event-based system would have been untenable to build on top of a simple database like CloudKit. You really needed active servers or peer-to-peer connections for that. And two, I discovered that the few known sequence OT algorithms guaranteed to converge in all cases—the ones with the coveted CP2/TP2 property—ended up relying on tombstones anyway! (If you’re interested, Raph Levien touches on this curious overlap in this article.) So it didn’t really matter which choice I made. If I wanted the resiliency of a provably convergent system, I had to deal with metadata-laden structures that left some trace of their deleted elements.

And with their focus on data over process, CRDTs pointed to a paradigm shift in the network’s role for document collaboration. By turning each document field into a CvRDT and preserving that representation when persisting to storage or sending across the network, you’d find yourself with a voraciously-mergeable document format that completely disentangled the network layer from the sync machinery. You’d be able to throw different revisions of the same document together in any order to obtain the same merge result, never once having to ask anything of the user. Everything would work without quirks in offline mode irrespective of how much time had passed. Data would only need to flow one way, streamed promiscuously to any devices listening for changes. The document would be topology-agnostic to such a degree that you could use it in a peer-to-peer environment, send it between phone and laptop via Bluetooth, share it with multiple local applications, and sync it through a traditional centralized database. All at the same time!

We tend to think of distributed systems as messy, stateful things. This is because most concurrent events acting on the same data require coordination to produce consistent results. By the laws of physics, remote machines are incapable of sending and receiving their changes as soon as they happen, forcing them to participate in busy conversations with their peers to establish a sensible local take on the global data. But a CRDT is always able to merge with its revisions, past or future. This means that all the computation can be pushed to the edges of the network, transforming the tangle of devices, protocols, and connections at the center of it all into a dumb, hot-swappable “transport cloud”. As long as a document finds its way from device A to device B, sync will succeed. In this world, data has primacy and everything else has to orbit around it!

I admit that this revelation made some wily political thoughts cross my mind. Could this be the chance to finally break free from the shackles of cloud computing? It always felt like such an affront that our data had to snake through a tangle of corporate servers in order to reach the devices right next to us. We used to happily share files across applications and even operating systems, and now everything was funneled through these monolithic black boxes. What happened? How did we let computing become so darn undemocratic? It had gotten so bad that we actually expected our content and workflows to regularly vanish as companies folded or got themselves acquired! Our digital assets—some our most valuable property—were entirely under management of outside, disinterested parties.

CRDTs offered our documents the opportunity to manage their own sync and collaboration, wrestling control over data from centralized systems back to its rightful owners. The road here was fresh and unpaved, however, and I had to figure out if I could use these structures in a performant and space-efficient way for non-trivial applications.

The next step was to read through the academic literature on CRDTs. There was a group of usual suspects for the hard case of sequence (text) CRDTs: WOOT, Treedoc, Logoot/LSEQ, and RGA. WOOT is the progenitor of the genre and gives each character in a string a reference its adjacent neighbors on both sides. Recent analysis has shown it to be inefficient compared to newer approaches. Treedoc has a similar early adopter performance penalty. Logoot (which is optimized further by LSEQ) curiously avoids tombstones by treating each element as a unique point along a dense (infinitely-divisible) number line, adopting bignum-like identifiers with unbounded growth for ordering. Unfortunately, it has a problem with interleaved text on concurrent edits. RGA makes each character implicitly reference its leftmost neighbor and uses a hash table to make character lookups efficient. It also features an update operation alongside the usual insert and delete. The paper is annoyingly dense with theory, but the approach often comes out ahead in benchmark comparisons. I also found a couple of recent, non-academic CRDT designs such as Y.js and xi, all of which brought something new to the table but felt rather convoluted in comparison to the good-enough RGA. In almost all cases, conflicts between concurrent changes were resolved by way of a unique origin ID plus a logical timestamp per character. Sometimes, they were discarded when an operation was applied; other times, they persisted even after merge.

Reading through the literature was highly educational, and I now had a good intuition about the behavior of sequence CRDTs. But I just couldn’t find very much in common between the disparate approaches. Each one brought its own operations, proofs, optimizations, conflict resolution methods, and garbage collection schemes to the table. Many of the papers blurred the line between theory and implementation, making it even harder to suss out any underlying principles. I felt confident using these algorithms for convergent arrays, but I wasn’t quite sure how to build my own replicated data structures using the same principles.

Finally, I discovered the one key CRDT that made things click for me.

Causal Trees

A state-based CvRDT, on a high level, can be viewed as a data blob along with a commutative, associative, and idempotent merge function that can always generate a monotonically further-ahead blob from any two. An operation-based CmRDT, meanwhile, can be viewed as a data blob that is mutated and pushed monotonically forward by a stream of commutative-in-effect (and maybe causally-ordered and deduplicated) events. If the CvRDT data blob was simply defined as an ordered collection of operations, could these two techniques be combined? We’d then have the best of both worlds: an eminently-mergeable data structure, together with the ability to define our data model in terms of domain-specific actions!

Let’s build a sequence CvRDT with this in mind. To have some data to work with, here’s an example of a concurrent string mutation.

Site 1 types “CMD”, sends its changes to Site 2 and Site 3, then resumes its editing. Site 2 and 3 then make their own changes and send them back to Site 1 for the final merge. The result, “CTRLALTDEL”, is the most intuitive merge we could expect: insertions and deletions all persist, runs of characters don’t split up, and most recent changes come first.

First idea: just take the standard set of array operations (insert A at index 0, delete index 3, etc.), turn each operation into an immutable struct, stick the structs into a new array in their creation order, and read them back to reconstruct the original array as needed. (In other words, an instance of the event sourcing pattern with the CvRDT serving as the event log.) This won’t be convergent by default since the operations don’t have an inherent total order, but it’s easy to fix this by giving each one a globally-unique ID in the form of an owner UUID3 plus a Lamport timestamp. (In the diagrams below, this is encoded as “SX@TY”, where “SX” represents the UUID for site X, and Y is just the timestamp.) With this scheme, no two operations can have the same ID: operations from the same owner will have different timestamps, while operations from different owners will have different UUIDs. The Lamport timestamps will be used to sort the operations into causal order, leaving the UUIDs for tiebreaking when concurrent operations happen to have the same timestamp. Now, when a new operational array arrives from a remote peer, the merge is as simple as iterating through both arrays and shifting any new operations to their proper spots: an elementary merge sort.

Success: it’s an operation-based, fully-convergent CvRDT! Well, sort of. There are two major issues here. First, the process of reconstructing the original array from the operational array has O(n2) complexity4, and it has to happen every time we merge. Second, intent is completely clobbered. Reading the operations back, we get something along the lines of “CTRLDATLEL” (with a bit of handwaving when it comes to inserts past the array bounds). Just because a data structure converges doesn’t mean it makes a lick of sense! In the earlier OT section, we saw that concurrent index-based operations can be made to miss their intended characters depending on the order. (Recall that this is the problem OT solves by transforming operations, but here our operations are immutable.) In a sense, this is because the operations are specified incorrectly. They make an assumption that doesn’t get encoded in the operations themselves—that an index can always uniquely identify a character—and thus lose the commutativity of their intent when this turns out not to be the case.

OK, so the first step is to fix the intent problem. To do that, we have to strip our operations of any implicit context and define them in absolute terms. Fundamentally, insert A at index 0 isn’t really what the user wants to do. People don’t think in terms of indices. They want to insert a character at the cursor position, which is perceived as being between two letters—or more simply, to the immediate right of a single letter. We can encode this by switching our operations to the format insert Aid after Bid, where each letter in the array is uniquely identified. Given causal order, and assuming that deleted characters persist until any operations that reference them are processed, the intent of these operations is now commutative: there will only ever be that one specific ‘B’ in the array, allowing us to always position ‘A’ just as the user intended.

So how do we identify a particular letter? Just ‘A’ and ‘B’ are ambiguous, after all. We could generate a new ID for each inserted letter, but this isn’t necessary: we already have unique timestamp+UUID identifiers for all our operations. Why not just use the operation identifiers as proxies for their output? In other words, an insert A operation could stand for that particular letter ‘A’ when referenced by other operations. Now, no extra data is required, and everything is still defined in terms of our original atomic and immutable operations.

This is significantly better than before! We now get “CTRLALTDEL” after processing this operational array, correctly-ordered and even preserving character runs as desired. But performance is still an issue. As it stands, the output array would still take O(n2) to reconstruct. The main roadblock is that array insertions and deletions tend to be O(n) operations, and we need to replay our entire O(n) history whenever remote changes come in or when we’re recreating the output array from scratch. Array push and pop, on the other hand, are only O(1) amortized. What if instead of sorting our entire operational array by timestamp+UUID, we positioned operations in the order of their output? This could be done by placing each operation to the right of its causal operation (parent), then sorting it in reverse timestamp+UUID order among the remaining operations5. In effect, this would cause the operational array to mirror the structure of the output array. The result would be identical to the previous approach, but the speed of execution would be substantially improved.

With this new order, local operations require a bit of extra processing when they get inserted into the operational array. Instead of simply appending to the back, they have to first locate their parent, then find their spot among the remaining operations—O(n) instead of O(1). In return, producing the output array is now only O(n), since we can read the operations in order and (mostly) push/pop elements in the output array as we go along6. In fact, we can almost treat this operational array as if it were the string itself, even going as far as using it as a backing store for a fully-functional NSMutableString subclass (with some performance caveats). The operations are no longer just instructions: they have become the data!

(Observe that throughout this process, we have not added any extra data to our operation structs. We have simply arranged them in a more precise causal order than the default timestamp+UUID sort allows, which is possible based on our knowledge of the unique causal characteristics of the data model. For example, no matter how high a timestamp an insert operation might have, we know that the final position of its output in the string is solely determined by its parent together with any concurrent runs of inserts that have a higher timestamp+UUID. Every other operation in timestamp+UUID order between that operation and its parent is irrelevant, even if the Lamport timestamps might conservatively imply otherwise. In other words: the Lamport timestamp serves as a sort of brute force upper bound on causality, but we can arrange the operations much more accurately using domain knowledge.)

Pulled out of its containing array, we can see that what we’ve designed is, in fact, an operational tree—one which happens to be implicitly stored as a depth-first, preorder traversal in contiguous memory. Concurrent edits are sibling branches. Subtrees are runs of characters. By the nature of reverse timestamp+UUID sort, sibling subtrees are sorted in the order of their head operations.

This is the underlying premise of the Causal Tree.

In contrast to all the other CRDTs I’d been looking into, the design presented in Victor Grishchenko’s brilliant paper was simultaneously clean, performant, and consequential. Instead of dense layers of theory and labyrinthine data structures, everything was centered around the idea of atomic, immutable, metadata-tagged, and causally-linked operations, stored in low-level data structures and directly usable as the data they represented. From these attributes, entire classes of features followed.

(The rest of the paper will be describing my own CT implementation in Swift, incorporating most of the concepts in the original paper but sporting tweaks based on further research.)

In CT parlance, the operation structs that make up the tree are called atoms. Each atom has a unique identifier comprised of a site UUID, index, and Lamport timestamp7. The index and timestamp serve the same role of logical clock, and the data structure could be made to work with one or the other in isolation. (The reason to have both is to enable certain optimizations: the index for O(1) atom lookups by identifier, and the timestamp for O(1) causality queries between atoms.) The heart of an atom is its value, which defines the behavior of the operation and stores any relevant data. (Insert operations store the new character to place, while delete operations contain no extra data.) An atom also stores the identifier of its cause, or parent, atom. Generally speaking, this is an atom whose effect on the data structure is a prerequisite for the proper functioning of the child atom. (As explained earlier, in a string CT, the causal link simply represents the character to the left of an insertion or the target of a deletion.)

In Swift code, an atom might look something like this:

struct Id: Codable, Hashable

{

let site: UInt16

let index: UInt32

let timestamp: UInt32

}

struct Atom<T: Codable>: Codable

{

let id: Id

let cause: Id

let value: T

}

While a string value might look like this:

enum StringValue: Codable

{

case null

case insert(char: UInt16)

case delete

// insert Codable boilerplate here

}

typealias StringAtom = Atom<StringValue>

What’s great about this representation is that Swift automatically compresses enums with associated values to their smallest possible byte size, i.e. the size of the largest associated value plus a byte for the case, or even less if Swift can determine that a value type has some extra bits available. Here, the size would be 3 bytes. (In case you’re wondering about the 16-bit “UUID” for the site, I’ve devised a mapping scheme from 16-bit IDs to full 128-bit UUIDs that I’ll explain in a later section.)

For convenience, a CT begins with a “zero” root atom, and the ancestry of each subsequent atom can ultimately be traced back to it. The depth-first, preorder traversal of our operational tree is called a weave, equivalent to the operational array discussed earlier. Instead of representing the tree as an inefficient tangle of pointers, we store it in memory as this weave array. Additionally, since we know the creation order of each atom on every site by way of its timestamp (and since a CT is not allowed to contain any causal gaps), we can always derive a particular site’s exact sequence of operations from the beginning of time. This sequence of site-specific atoms in creation order is called a yarn. Yarns are more of a cache than a primary data structure in a CT, but I keep them around together with the weave to enable O(1) atom lookups. To pull up an atom based on its identifier, all you have to do is grab its site’s yarn array and read out the atom at the identifier’s index.

Storing the tree as an array means we have to be careful while modifying it, or our invariants will be invalidated and the whole thing will fall apart. When a local atom is created and parented to another atom, it is inserted immediately to the right of its parent in the weave. It’s easy to show that this logic preserves the sort order: since the new atom necessarily has a higher Lamport timestamp than any other atom in the weave, it always belongs in the spot closest to its parent. On merge, we have to be a bit more clever if we want to keep things O(n). The naive solution—iterating through the incoming weave and individually sorting each new atom into our local weave—would be O(n2). If we had an easy way to compare any two atoms, we could perform a simple and efficient merge sort. Unfortunately, the order of two atoms is a non-binary relation since it involves ancestry information in addition to the timestamp and UUID. In other words, you can’t write a simple comparator for two atoms in isolation without also referencing the full CT.

Fortunately, we can use our knowledge of the underlying tree structure to keep things simple. (The following algorithm assumes that both weaves are correctly ordered and preserve all their invariants.) Going forward, it’s useful to think of each atom as the head of a subtree in the larger CT. On account of the DFS ordering used for the weave, all of an atom’s descendants are contained in a contiguous range immediately to its right called a causal block. To merge, we compare both weaves atom-by-atom until we find a mismatch. There are three possibilities in this situation: the local CT has a subtree missing from the incoming CT, the incoming CT has a new subtree missing from the local CT, or the two CTs have concurrent sibling subtrees. (Proving that the only possible concurrent change to the same spot is that of sibling subtrees is an exercise left to the reader.) The first two cases are easy to discover and deal with: verify that one of the two atoms appears in the other’s CT and keep inserting or fast-forwarding atoms until the two weaves line up again. For the last case, we have to arrange the two concurrent causal blocks in their correct order. This is pretty simple, too: the end of a causal block can be found based on an algorithm featured in the paper8, while the ultimate order of the blocks is determined by the order of their head atoms. Following any change to the weave, any stored yarns must also be updated.

One more data structure to note is a collection of site+timestamp pairs called a weft, which is simply a fancy name for a version vector. You can think of this as a filter on the tree by way of a cut across yarns: one in which only the atoms with a timestamp less than or equal to their site’s timestamp in the weft are included. Wefts can uniquely identify and split the CT at any point in its mutation timeline, making them very useful for features such as garbage collection and past revision viewing. They give us the unique ability to manipulate the data structure in the time domain, not just the space domain.

A weft needs to be consistent in two respects. First, there’s consistency in the distributed computing sense: causality of operations must be maintained. This is easily enforced by ensuring that the tree is fully-connected under the cut. Second, there’s the domain-dependent definition: the resulting tree must be able to produce an internally-consistent data structure with no invariants violated. This isn’t an issue with strings, but there are other kinds of CT-friendly data where the weave might no longer make sense if the cut is in the wrong place. In the given example, the weft describes the string “CDADE”, providing a hypothetical view of the distributed data structure in the middle of all three edits.

Demo: Concurrent Editing in macOS and iOS

Words, words, words! To prove that the Causal Tree is a useful and effective data structure in the real world, I’ve implemented a generic version in Swift together with a set of demo apps. Please note that this is strictly an educational codebase and not a production-quality library! My goal with this project was to dig for knowledge that might aid in app development, not create another framework du jour. It’s messy, undocumented, a bit slow, and surely broken in some places—but it gets the job done.

From 0:00–0:23, sites 1–3 are created and connect to each other in a ring. From 0:23–0:34, Site 4 and 5 are forked from 1, establish a two-way connection to 1 to exchange peer info, then go back offline. At 0:38, Site 4 connects to 5, which is still not sending data to anyone. At 0:42, Site 5 connects to 1 and Site 1 connects to 4, finally completing the network. At 0:48, all the sites go offline, then return online at 1:06.

The first part of the demo is a macOS mesh network simulator. Each window represents an independent site that has a unique UUID and holds its own copy of the CT. The CTs are edited locally through the type-tailored editing view. New sites must be forked from existing sites, copying over the current state of the CT in the process. Sites can go “online” and establish one-way connections to one or more known peers, which sends over their CT and known peer list about once a second. On receipt, a site will merge the inbound CT into their own. Not every site knows about every peer, and forked sites will be invisible to the rest of the network until they go online and connect to one of their known peers. All of this is done locally to simulate a partitioned, unreliable network with a high degree of flexibility: practically any kind of topology or partition can be set up using these windows. For string editing, the text view uses the CT directly as its backing store by way of an NSMutableString wrapper plugged into a bare-bones NSTextStorage subclass.

You can open up a yarn view that resembles the diagram in the CT paper, though this is only really legible for simple cases. In this view, you can scroll around with the left mouse button and select individual atoms to list their details with the right mouse button.

The three sites are connected in a ring. At 0:43, all sites go offline, then return online at 1:00.

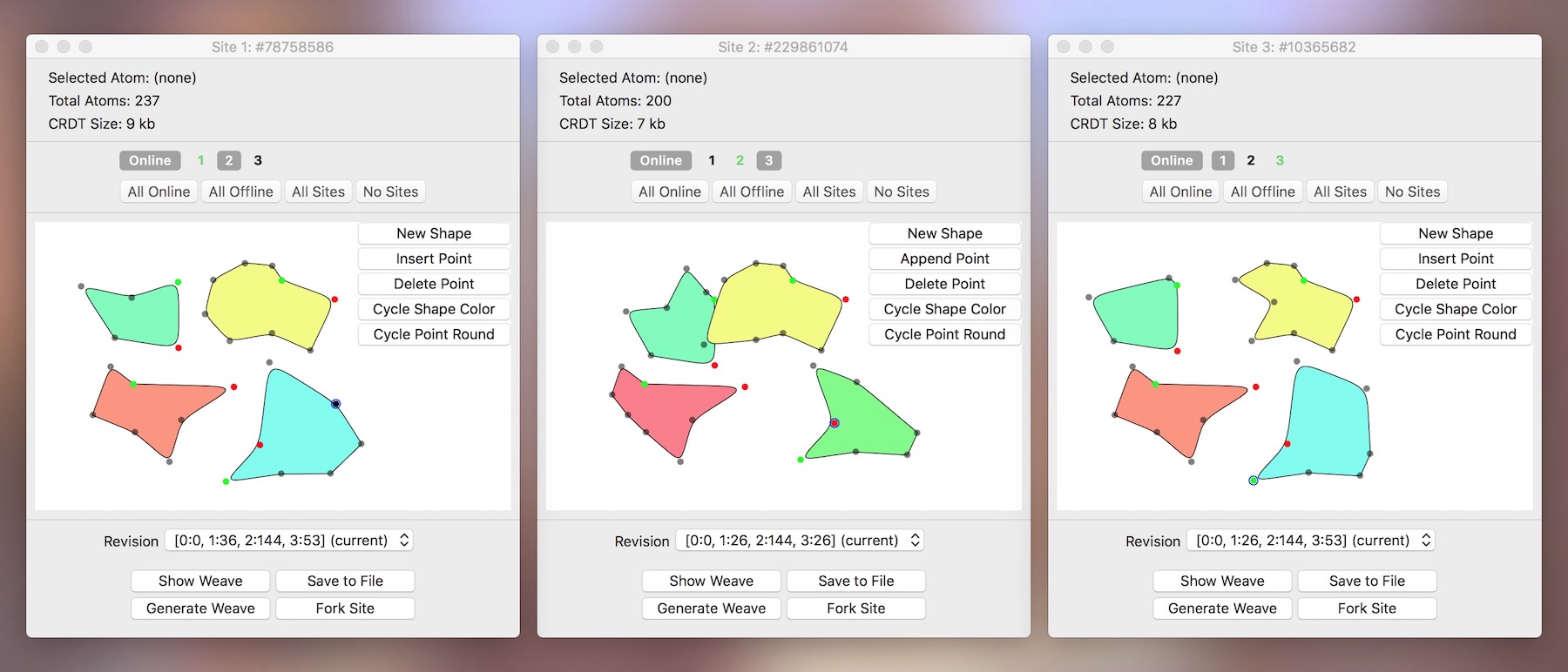

Also included is an example of a CT-backed data type for working with simple vector graphics. Using the editing view, you can create shapes, select and insert points, move points and shapes around, change the colors, and change the contours. Just as before, everything is synchronized with any combination of connected peers, even after numerous concurrent and offline edits. (To get a sense of how to use CTs with non-string data types, read on!)

Each site can display a previously-synced, read-only revision of its document via the dropdown list. Because a CT’s operations are atomic, immutable, and tagged with precise origin metadata, this functionality effectively comes free! And this is only one of many emergent properties of CTs.

The phone on the left shares an iCloud account with the phone in the middle, while the phone on the right is logged in to a different iCloud account and has to receive a CloudKit Share. At 0:29 and 1:21, remote cursor sync is demonstrated, and 1:30–2:00 shows offline use. At 2:15, simultaneous predictive typing is used to demo editing under high concurrency. Apologies for the occasional delays: iCloud is slow and my network code is a mess!

The second part of the demo is a very simple CloudKit-powered text editing app for iOS. Much of it is wonky and unpolished (since I’m very much a CloudKit newbie), but the important part is that real-time collaboration (including remote cursors) works correctly and efficiently whether syncing to the same user account, collaborating with others via CloudKit Sharing, or just working locally for long periods of time. The network layer only deals with binary data blobs and has no insight into the particular structure of the data. Whether local or remote, all conflicts are resolved automatically in the same section of code. Best of all, no extra coordinating servers are required: the dumb CloudKit database works just fine.

My CT implementation isn’t quite production ready (though I’ll keep hammering away for use in my commercial projects), but I think it’s convincing proof that the technique is sound and practical for use with collaborative document-based applications!

Operational Replicated Data Types

Causal Trees, however, are just the beginning: there’s a more universal pattern at work here. Recent research projects, including Victor Grishchenko’s Replicated Object Notation (RON) and the paper Pure Operation-Based Replicated Data Types (hereafter PORDT), have extended the operational paradigm to almost any kind of CRDT, giving us a standard set of tools for designing and analyzing these fascinating data structures.

For the sake of clarity, and since neither of these projects seems terribly concerned with nomenclature, I’m going to be calling this new breed of CRDTs operational replicated data types—partly to avoid confusion with the exiting term “operation-based CRDTs” (or CmRDTs), and partly because “replicated data type” (RDT) seems to be gaining popularity over “CRDT” and the term can be expanded to “ORDT” without impinging on any existing terminology.

PORDT is missing some features that RON includes9, so ORDTs have the most in common with the RON approach.

What Is an ORDT?

Much like Causal Trees, ORDTs are assembled out of atomic, immutable, uniquely-identified and timestamped “operations” which are arranged in a basic container structure. (For clarity, I’m going to be referring to this container as the structured log of the ORDT.) Each operation represents an atomic change to the data while simultaneously functioning as the unit of data resultant from that action. This crucial event–data duality means that an ORDT can be understood as either a conventional data structure in which each unit of data has been augmented with event metadata; or alternatively, as an event log of atomic actions ordered to resemble its output data structure for ease of execution10. Consequently, an ORDT doesn’t necessarily need to be evaluated to be useful: many queries can be run on the structured log as-is11. Whether syncing a single operation, applying a longer patch, or performing a full-on merge, every change to an ORDT is integrated through the same kind of low-level “rebase” inside the container. This means that the distinction between op-based (CmRDT) and state-based (CvRDT) usage becomes effectively moot.

ORDTs are meant to replace conventional data types, and one might wonder if this approach is needlessly bloated compared to one in which the content and history are kept separated12. But in fact, I’d argue that the operational approach is the only one that stays true to nature of the problem. One way or another, every event in a convergent data type needs to be uniquely defined, identified, timestamped, and associated with its output. If these properties aren’t kept together, then the design will require a tight, complex binding between the history and data parts. In effect, the convergence data would become a brittle reflection of the original content. Some new CRDTs (such as the one used in xi) go in this direction, resulting in very complicated rewind and replay code that has to be custom-tailored to each data type and approaches O(n2) complexity with the length of the history log. Meanwhile, ORDTs of practically any variety can readily perform all the same tasks in a generic way with minimal code changes and uniform O(nlogn) complexity!

The decomposition of data structures into tagged units of atomic change feels like one of those rare foundational abstractions that could clarify an entire field of study. Indeed, many existing CRDTs (such as RGA) have made passes at this approach without fully embracing it, incorporating authorship and logical timestamps into proto-operational units that often get consumed during merge. With the ORDT approach, those same CRDTs can be expressed in a general way, unifying their interface and performance characteristics across the board while providing them a wide variety of standard, useful features. RON has working implementations for LWW (last-writer-wins) registers, Causal Trees (under the RGA moniker), and basic sets, while PORDT additionally defines MVRegisters, AWSets, and RWSets.

So what are some of the benefits to the operational approach? For one, satisfying the CRDT invariants becomes trivial. Idempotency and commutativity are inherent in the fact that each ORDT is simply an ordered collection of unique operations, so there’s never any question of what to do when integrating remote changes. Even gapless causal order, which is critical for convergence in most CRDTs, becomes only a minor concern, since events missing their causal ancestors could simply be ignored on evaluation. (RON treats any causally-isolated segments of an ORDT as “patches” that can be applied to the main ORDT as soon as their requisite ancestors arrive.) Serializing and deserializing these structures is as simple as reading and writing streams of operations, making the format perfectly suited for persisting documents to disk. Since operations are simply data, it’s easy to selectively filter or divide them using version vectors. (Uses for this include removing changes from certain users, creating delta patches, viewing past revisions, and setting garbage collection baselines.) The fact that each operation has a globally-unique identifier makes it possible to create deep references from one ORDT into another, or to create permalinks to arbitrary ranges in an ORDT’s edit history. (For instance, the cursor marker in my text editor is defined as a reference to the atom corresponding to the letter on its left.) Finally, the discretized nature of the format gives you access to some powerful version control features, such as “forking” an ORDT while retaining the ability to incorporate changes from the original, or instantly diffing any two ORDTs of the same type.

Chas Emerick puts it best in his article titled “Distributed Systems and the End of the API”:

The change is subtle, but has a tectonic effects. “Operations”, when cast as data, become computable: you can copy them, route them, reorder them freely, manipulate them and apply programs to them, at any level of your system. People familiar with message queues will think this is very natural: after all, producers don’t invoke operations on or connect directly to the consumers of a queue. Rather, the whole point of a queue is to decouple producer and consumer, so “operations” are characterized as messages, and thus become as pliable as any other data.

The ORDT Pipeline

Let’s talk about how this design actually works in practice. To start with, ORDT operations are defined to be immutable and globally unique. Each operation is bestowed, at minimum, with an ID in the form of a site UUID and a Lamport timestamp, a location identifier (which is generally the ID of another operation), and a value. An operation is meant to represent an atomic change in the data structure, local in effect and directly dependent on one other operation at most13. The location field of an operation tends to point to its direct causal prerequisite: the last operation whose output is necessary for it to function. If no such dependency exists, then it could simply point to the newest operation available in the current ORDT, or even to an arbitrary value for sorting purposes. Although each operation only contains a single Lamport timestamp, the location field provides almost as much additional causality and context information as a typical version vector would. This makes it possible to identify and segregate concurrent operations without the untenable O(ns) space complexity of a version vector per operation, as seen in many designs for other distributed systems.

New operations (local and remote) are incorporated into the ORDT through a series of functions. The pipeline begins with an incoming stream of operations, packaged depending on the use case. For an ORDT in CvRDT (state) mode, this would be a state snapshot in the form of another structured log; for CmRDT (operation) mode, any set of causally-linked operations, and often just a single one.

New operations, together with the current structured log, are fed into a reducer (RON) or effect (PORDT) step. This takes the form of a function that inserts the operations into their proper spot in the structured log, then removes any redundant operations as needed.

What are these “redundant operations”, you might ask? Isn’t history meant to be immutable? Generally, yes—but in some ORDTs, new operations might definitively supersede previous operations and make them redundant for convergence. Take a LWW register, for example. In this very basic ORDT, the value of an operation with the highest timestamp+UUID supplants any previous operation’s value. Since merge only needs to compare a new operation with the previous highest operation, it stands to reason there’s simply no point in keeping the older operations around. (PORDT defines these stale operations in terms of redundancy relations, which are unique to each ORDT and are applied as part of the effect step.)

Here, I have to diverge from my sources. In my opinion, the cleanup portion of the reducer/effect step ought to be separated out and performed separately (if at all). Even though some ORDT operations might be made redundant with new operations, retaining every operation in full allows us to know the exact state of our ORDT at any point in its history. Without this assurance, relatively “free” features such as garbage collection and past revision viewing become much harder (if not impossible) to implement in a general way. I therefore posit that at this point in the pipeline, there ought to be a simpler arranger step. This function would perform the same sort of merge and integration as the reducer/effect functions, but it wouldn’t actually remove or modify any of the operations. Instead of happening implicitly, the cleanup step would be explicitly invoked as part of the garbage collection routine, when space actually needs to be reclaimed. The details of this procedure are described in the next section.

(I should note that RON and PORDT additionally clean up operations in the reducer/effect step by stripping some of their metadata when it’s no longer required. For instance, in RON’s implementation of a sequence ORDT, the location ID of an operation is dropped once that operation is properly positioned in the structured log. Grishchenko does emphasize that every RDT currently defined in RON retains enough context information when compressed to allow the full history to be restored, but I think this property would be difficult to prove for arbitrary RDTs. Additionally, I’m generally against this kind of trimming because it ruins the functional purity of the system. The operations become “glued” to their spot in the structured log, and you lose the ability to treat the log and operations as independent entities. Either way, I firmly believe that this kind of cleanup step also belongs in the garbage collection routine.)

The final bit of the pipeline is the mapper (RON) or eval (PORDT) step. This is the code that finally makes sense of the structured log. It can either be a function that produces an output data structure by executing the operations in order, or alternatively, a collection of functions that queries the structured log without executing anything. In the case of string ORDTs, the mapper might simply emit a native string object, or it might take the form of an interface that lets you call methods like length, characterAtIndex:, or even replaceCharactersInRange:withString: directly on the structured log. It’s absolutely critical that the output of each function in this step is equivalent to calling that same function on the data structure resultant from the linearization and execution of every operation in the ORDT. (So evaluating length directly on the operations should be the same as executing all the operations in order, then calling length on the output string.) All issues pertaining to conflict resolution are pushed into this section and become a matter of interpreting the data.

The arranger/reducer/effect and the mapper/eval functions together form the two halves of the ORDT: one dealing with the memory layout of data, the other with its user-facing interpretation. The data half, as manifest in the structured log, needs to be ordered such that queries from the interface half remain performant. If the structured log for an ORDT ends up kind of looking like the abstract data type it’s meant to represent (e.g. a CT’s weave ⇄ array), then the design is probably on the right track. Effectively, the operations should be able to stand for the data.

So how is the structured log stored, anyway? PORDT does not concern itself with the order of operations: all of them are simply stuck in a uniform set. Unfortunately, this is highly inefficient for more complex data types such as sequences, since the set has to be sorted into a CT-like order before each query. RON’s insight is that the order of operations really matters for mapper performance, and so the operations are arranged in a kind of compressed array called a frame. Each operation is defined in terms of a regular language that allows heavy variables such as UUIDs to only appear a minimum number of times in the stream. In both cases, operational storage is generic without any type-specific code. Everything custom about a particular data type is handled in the reducer/effect and mapper/eval functions.

But this is another spot where I have to disagree with the researchers. Rather than treating the structured log and all its associated functions as independent entities, I prefer to conceptualize the whole thing as a persistent, type-tailored object, distributing operations among various internal data structures and exposing merge and query methods through an OO interface. In other words, the structured log, arranger, and parts of the mapper would combine to form one giant object.

The reason is that ORDTs are meant to fill in for ordinary data structures, and sticking operations into a homogeneous container might lead to poor performance depending on the use case. For instance, many text editors now prefer to use the rope data type instead of simple arrays. With a RON-style frame, this transition would be uncomfortable: you’re stuck with the container you’re given, so you’d have to create an extraneous syncing step between the frame and secondary rope structure. But with an object-based ORDT, you could almost trivially switch out the internal data structure for a rope and be on your merry way. In addition, more complex ORDTs might require numerous associated caches for optimal performance, and the OO approach would ensure that these secondary structures stayed together and remained consistent on merge.

That’s all there is to the ORDT approach! Operations are piped in from local and remote sources, arranged in some sort of container, and then executed or queried directly to produce the output data. At a high level, ORDTs are delightfully simple to work with and reason about.

Garbage Collection

(This section is a bit speculative since I haven’t implemented any of it yet, but I believe the logic is sound—if a bit messy.)

Garbage collection has been a sticking point in CRDT research, and I believe that ORDTs offer an excellent foundation for exploring this problem. A garbage-collected ORDT can be thought of as a data structure in two parts: the “live” part and the compacted part. Operations in the live part are completely unadulterated, while operations in the compacted part might be modified, removed, compressed, or otherwise altered to reclaim storage. As we saw earlier, a CT can be split into two segments by way of a version vector, or “weft”. The same applies to any ORDT, and this allows us to store a baseline weft alongside the main data structure to serve as the dividing line between live and compacted operations. Assuming causal order14, any site that receives a new baseline would be obliged to compact all operations falling under that weft in its copy of the ORDT, as well as to drop or orphan any operations that are not included in the weft, but have a causal dependency on any removed operations. (These are usually operations that haven’t been synced in time.)

In effect, the baseline can be thought of as just another operation in the ORDT: one that requires all included operations to pass through that ORDT’s particular garbage collection routine. The trick is making this operation commutative.

In ORDTs, garbage collection isn’t just a matter of removing “tombstone” operations or their equivalent. It’s also an opportunity to drop redundant operations, coalesce operations of the same kind, reduce the amount of excess metadata, and perform other kinds of cleanup that just wouldn’t be possible in a strictly immutable and homogeneous data structure. Although baseline selection has to be done very carefully to prevent remote sites from losing data, the compaction process itself is quite mechanical once the baseline is known. We can therefore work on these two problems in isolation.

Given a baseline, there are two kinds of compaction that can be done. First, there’s “lossless” compaction, which involves dropping operations that no longer do anything and aren’t required for future convergence. (PORDT calls this property of operations causal redundancy and removes any such operations in the effect step. Remember, we split this functionality off to define the arranger step.) In essence, lossless compaction is strictly a local issue, since the only thing it affects is the ability for an ORDT to rewind itself and access past revisions. Nothing else about the behavior of the ORDT has to change. You could even implement this form of compaction without a baseline at all. However, only simpler ORDTs such as LWW registers tend to have operations with this property.

The second kind of compaction actually involves making changes to existing operations. The behavior of this step will vary from ORDT to ORDT. A counter ORDT could combine multiple add and subtract operations into a single union operation. A sequence ORDT could remove its deleted operations, then modify any descendants of those operations to ensure that they remain correctly sorted even without their parents. Since modifying existing operations can easily cause corruption, it’s essential to follow two basic rules when figuring out how to apply this kind of “lossy” compaction to an ORDT. First, the compacted portion of the ORDT must be able to produce the same output as its constituent operations. And second, the compacted portion of the ORDT must retain enough metadata to allow future operations to reference it on an atomic level and order themselves correctly. From the outside, a compacted ORDT must continue to behave exactly the same as a non-compacted ORDT.

There are many possible ways to implement compaction. One approach is to freeze all the operations included in the baseline into an ordered data structure and separate it out from the rest of the structured log. Depending on the ORDT in question, it might be possible to strip some of the operation metadata or even store those compacted operations as the output data type itself. (This is the approach utilized by the PORDT authors.) However, there may be performance penalties and headaches from having the data split into two parts like that, especially if spatial locality and random access are required for efficient mapper/eval performance. Merging two garbage-collected ORDTs might also become a problem if neither baseline is a strict superset of the other.

An alternative is to keep the compacted operations mixed in with the live operations, but exceptional care must be taken to ensure that every operation remains in its proper spot following compaction. For example, in a CT, blindly removing a deleted operation that falls under a baseline would orphan any non-deleted child operations that it might have. Naively, one might think that these children could simply be modified to point at the deleted operation’s parent (i.e. their grandparent), but this would change their sort order with respect to the parent’s siblings. (In other words, even “tombstone” operations in a CT serve an important organizational role.) One solution would be to only allow the removal of operations without any children, making several passes over the baselined operations to ensure that all possible candidates are removed. The first pass would remove just the delete operations (since they’re childless) and add a delete flag to their target operations. (So in our Swift implementation, the enum type of the StringAtom might change from insert to something like deleted-insert.) The second pass, starting from the back of the deleted range, would remove any marked-as-deleted operations that have no additional children. And as an extra tweak, if a marked-as-deleted operation’s only child happened to be another marked-as-deleted operation, then the child operation could take the place of its parent, overwriting the parent’s contents with its own. Using this technique, most deletes under a baseline could be safely removed without changing the behavior, output, or storage of a CT.

Baseline selection is where things get tricky. When picking a baseline, every operation possibly affected by the removal of an operation (such as the children of a deleted operation in a CT) must be included as well. Locally, this is easy to do; but the big risk is that if a removed operation has any unknown descendants on some remote site, then those operations will be orphaned if the baseline fails to include them. With some ORDTs, this may be acceptable. If an object in a JSON ORDT is deleted out from under a site with pending changes to that object, then no harm is done: the delete would have obviated those changes even if they were synced. But in a CT, each deleted character continues to serve a vital structural role for ordering adjacent characters, and a hasty removal could cause arbitrary substrings to disappear on remote sites. We can mitigate this problem by first stipulating that no new operations may be knowingly parented to deleted characters, and that no delete operations may have any children or be deleted themselves. (This is the expected behavior anyway since a user can’t append a character to a deleted character through a conventional text editor interface, but it should be codified programmatically.) With these preconditions in place, we know that once a site has received a delete operation, it will never produce any new children for that deleted character. We therefore know that once every site in the network has seen a particular delete operation and its causal ancestors—when that delete operation is stable—that no new operations affected by that delete will ever appear in the future, and that a baseline could in theory be constructed that avoids orphaning any operations across the network. (PORDT uses similar logic for its stable step, which comes after the effect step and cleans up any stable operations provably delivered to all other sites.)

But here’s where we hit a snag. Generally speaking, CRDT research is tailored to the needs of distributed systems and frequently makes architectural concessions in that direction. Perhaps the amount of sites is assumed to be known; perhaps there’s an expected way to determine the causal stability of an operation; or perhaps the protocols for communication between sites are provided. But in my own exploration of CRDTs, no such assumptions have been made. The objects described in this article are pure, system-agnostic data structures, completely blind to the ways in which operations get from one site to another. Even inter-device communication isn’t a hard requirement! Someone could leave a copy of their ORDT on an office thumb drive, return a year later, and successfully merge all the new changes back into their copy. So whenever any additional bit of synchronization is mandated or assumed, the possibility space of this design shrinks and generality is lost. The messiness of time and state are injected into an otherwise perfectly functional architecture.

Unfortunately, baseline selection might be the one component where a bit of coordination is actually required.

In an available and partition-tolerant system system, is it possible to devise a selection scheme that always garbage collects without orphaning any operations? Logically speaking, no: if some site copies the ORDT from storage and then works on it in isolation, there’s no way the other sites will be able to take it into account when picking their baseline. However, if we require our system to only permit forks via request to an existing site, and also that all forked sites ACKs back to their origin site on successful initialization, then we would have enough constraints to make non-orphaning selection work. Each site could hold a map of every site’s ID to its last known version vector. When a fork happens (and is acknowledged), the origin site would add the new site ID to its own map and seed it with its timestamp. This map would be sent with every operation or state snapshot between sites and merge into the receiver’s map alongside the ORDT. (In essence, the structure would act as a distributed summary of the network.) Now, any site with enough information about the others would be free to independently set a baseline that a) is causally consistent, b) is consistent by the rules of the ORDT, c) includes only those removable operations that have been received by every site in the network, and d) also includes every operation affected by the removal of those operations. With these preconditions in place, you can prove that even concurrent updates of the baseline across different sites will converge.

But questions still remain. For instance: what do we do if a site simply stops editing and never returns to the network? It would at that point be impossible to set the baseline anywhere in the network past the last seen version vector from that site. Now some sort of timeout scheme has to be introduced, and I’m not sure this is possible to do in a truly partitioned system. There’s just no way to tell if a site has left forever or if it’s simply editing away in its own parallel partition. So we’d have to add some sort of mandated communication between sites, or perhaps some central authority to validate connectivity, and now the system is constrained even further. In addition, as an O(s2) space complexity data structure, the site-to-version-vector map could get unwieldy depending on the number of peers.

Alternatively, we might relax rules c) and d) and allow the baseline to potentially orphan remote operations. With this scheme, we would retain a sequence of baselines15 associated with our ORDT. Any site would be free to pick a new baseline that was explicitly higher than the previous highest baseline, taking care to pick one that had the highest chance of preserving operations on other sites16. Then, any site receiving new baselines in the sequence would be required to apply them in order. Upon receiving and executing a baseline, a site that had operations causally dependent on removed operations but not themselves included in the baseline would be obliged to either drop them or to add them to some sort of secondary “orphanage” ORDT.

But even here we run into problems with coordination. If this scheme worked as written, we would be a-OK, so long as sites were triggering garbage collection relatively infrequently and only during quiescent moments (as determined to the best of a site’s ability). But we have a bit of an issue when it comes to picking monotonically higher baselines. What happens if two sites concurrently pick new baselines that orphan each others’ operations?

Assume that at this point in time, Site 2 and Site 3 don’t know about each other and haven’t received each other’s operations yet. The system starts with a blank garbage collection baseline. Site 2 decides to garbage collect with baseline 1:3–2:6, leaving behind operations “ACD”. Site 3 garbage collects with baseline 1:3–3:7, leaving operations “ABE”. Meanwhile, Site 1—which has received both Site 2 and 3’s changes—decides to garbage collect with baseline 1:3–2:6–3:7, leaving operations “AED”. So what do we do when Site 2 and 3 exchange messages? How do we merge “ACD” and “ABE” to result in the correct answer of “AED”? In fact, too much information has been lost: 2 doesn’t know to delete C and 3 doesn’t know to delete B. We’re kind of stuck.

(I have to stress that baseline operations must behave like ordinary ORDT operations, in the sense that they have to converge to the same result regardless of their order of arrival. If they don’t, our CRDT invariants break and eventual consistency falls out of reach!)

In this toy example, it may still be possible to converge by drawing inferences about the missing operations from the baseline version vector of each site. But that trick won’t work with more devious examples featuring multiple sites deleting each others’ operations and deletions spanning multiple adjacent operations. Maybe there exists some clever scheme which can bring us back to the correct state with any combination of partial compactions, but my hunch is that this situation is provably impossible to resolve in a local way without accruing ancestry metadata—at which point you’re left with the same space complexity as in the non-compacted case anyway.

Therefore—just as with the non-orphaning baseline strategy—it seems that the only way to make this work is to add a bit of coordination. This might take the form of:

- Designating one or more sites superusers and making them decide on the baselines for all other sites.

- Putting the baseline to vote among a majority/plurality of connected sites.